Executive Summary

The prevailing question occupying the C-suite is no longer if Generative AI will impact their business, but how to harness it for strategic advantage. Leaders are seeking a bridge from the generalized, public-facing capabilities of Large Language Models (LLMs) to the specific, high-value needs of their enterprise. The primary obstacles are clear: the risk of factual inaccuracies (“hallucinations”) and the necessity of grounding AI responses in the company’s private, proprietary data.

The definitive answer to this challenge is an architecture known as Retrieval-Augmented Generation (RAG). RAG is a practical, powerful framework that synergizes the reasoning and language capabilities of an LLM with the factual accuracy of a curated, internal knowledge base. It is the key to transforming Generative AI from a fascinating novelty into a reliable, enterprise-grade asset.

This analysis provides a strategic framework for leaders to evaluate and implement RAG. We will demystify the underlying technology, present a portfolio of high-value investment use cases across the enterprise, and outline a pragmatic, phased roadmap for building and scaling a RAG system. For organizations whose competitive advantage is derived from their unique, accumulated knowledge, adopting a RAG strategy is not merely an option—it is a strategic imperative for the coming decade.

Chapter 1: The Enterprise Knowledge Dilemma: From Data-Rich to Insight-Poor

The modern enterprise is drowning in a sea of its own unstructured data. Decades of valuable, hard-won knowledge is siloed across countless systems: technical documentation in Confluence, legal precedents in SharePoint, product specifications in PDF reports, customer support solutions in Zendesk tickets, and strategic insights in meeting transcripts. While this information is technically stored, it is functionally inaccessible. It represents a vast, latent asset that most organizations have failed to monetize.

This “data-rich, insight-poor” paradox creates significant organizational friction:

- Expert Time is Wasted: Highly skilled (and highly compensated) engineers, lawyers, and support specialists spend an inordinate amount of time searching for information rather than applying their expertise. Knowledge transfer from senior to junior employees is inefficient and slow.

- Decision Velocity is Impeded: Strategic decisions are delayed as teams struggle to find the relevant data and precedents to support their initiatives.

- Corporate Memory is Fragile: When an expert leaves the company, their accumulated knowledge often leaves with them, forcing the organization to relearn expensive lessons.

Traditional keyword search has proven inadequate for this challenge. It lacks semantic understanding and cannot synthesize information from multiple sources. What is needed is a system that can understand a user’s intent, retrieve contextually relevant information from across the entire knowledge base, and synthesize it into a direct, trustworthy answer. This is the precise problem that RAG is designed to solve.

Chapter 2: RAG Demystified: The Architectural Blueprint

At its core, a RAG system functions like a brilliant, expert researcher with an open book. Instead of relying on its own fallible memory (the LLM’s training data), it is given the precise, relevant documents needed to answer a question just-in-time. This “open-book” approach dramatically improves accuracy and virtually eliminates hallucinations.

The architecture consists of three core stages:

1. Ingestion & Indexing (The Library)

This is the offline process where your company’s knowledge is prepared for the AI.

- Data Loading: The system connects to your various data sources (Confluence, SharePoint, file servers, etc.) and loads the raw documents (PDFs, Word docs, web pages).

- Chunking: Each document is broken down into smaller, manageable “chunks” of text—typically a few paragraphs long. This is critical because it allows the system to find highly specific passages of text instead of entire, lengthy documents.

- Embedding: Each chunk is then fed into an embedding model, a specialized neural network that converts the text into a numerical vector (a list of numbers). This vector represents the semantic meaning of the text. Chunks with similar meanings will have mathematically similar vectors.

- Indexing: These vectors and their corresponding text chunks are stored in a vector database, a specialized database optimized for incredibly fast similarity searches. This process creates a searchable, semantic index of your entire knowledge base.

Analogy: This stage is akin to building a hyper-intelligent card catalog for a vast library. Instead of just indexing by title or author, every paragraph of every book is indexed based on its meaning.

2. Retrieval (The Research Assistant)

This is what happens in real-time when a user asks a question.

- Query Embedding: The user’s question (e.g., “What was our material stress tolerance for Project Phoenix?”) is also converted into a vector using the same embedding model.

- Semantic Search: The system takes the query vector and uses the vector database to find the text chunks with the most similar vectors. This is not a keyword search; it’s a semantic search based on meaning. It will find relevant passages even if they don’t contain the exact words used in the question.

- Context Formulation: The system retrieves the top K most relevant text chunks (e.g., the top 5). This collection of relevant text becomes the “context” for the final step.

3. Augmentation & Generation (The Expert Synthesizer)

This is the final stage where the LLM is utilized.

- Prompt Augmentation: The system constructs a new, detailed prompt for the LLM. This prompt essentially says: “You are an expert assistant. Answer the following User Question based only on the Provided Context. Do not use any other information. If the answer is not in the context, say you don’t know.” The prompt then includes the original user question and the retrieved text chunks.

- Generation: The LLM receives this augmented prompt and generates a response. Because it has been given the exact, factual information it needs, the answer it produces is grounded in your company’s data, accurate, and contextually relevant.

This elegant, three-stage process transforms the LLM from a source of general knowledge into a tailored expert on your specific business.

Chapter 3: The RAG Investment Portfolio: Strategic Use Cases

For a leadership team, evaluating RAG requires framing it as an investment portfolio. Capital should be allocated to use cases that provide the highest strategic return, whether through productivity gains, risk mitigation, or new revenue streams.

Investment Thesis 1: Operational Efficiency & Expert Augmentation

- Problem: High-value experts are bogged down by low-value information retrieval tasks.

- RAG Solution: An internal “expert-in-a-box” that provides instant, synthesized answers from a vast knowledge base.

- Value Proposition: Massively accelerates research, reduces onboarding time for new hires, and frees up senior talent to focus on strategic work.

- Key Risks: User adoption, quality of the underlying knowledge base.

Use Cases:

- Engineering & R&D: A RAG system trained on all past project documentation, technical reports, and codebases. An engineer can ask, “What were the thermal performance trade-offs we considered for the Gen-3 sensor array?” and receive a synthesized summary from a dozen reports, saving days of research.

- Customer Support: A RAG for the support team, connected to technical manuals, past support tickets, and community forums. An agent can ask, “How do I solve error code 501 for an Enterprise customer with the Salesforce integration?” and get an instant, step-by-step solution, complete with links to the source documents. This drastically reduces response times and improves customer satisfaction.

Investment Thesis 2: Risk Mitigation & Compliance

- Problem: Navigating complex regulatory requirements and legal obligations is time-consuming and fraught with risk.

- RAG Solution: A specialized RAG that provides precise answers from a corpus of legal, regulatory, and compliance documents.

- Value Proposition: Mitigates compliance risks by ensuring consistent, accurate information is used. Increases the efficiency of legal and compliance teams.

- Key Risks: Ensuring the knowledge base is always up-to-date with the latest regulations.

Use Cases:

- Legal & Contract Analysis: A RAG trained on millions of pages of case law and all of the company’s past contracts. A lawyer can ask, “Find all precedents related to intellectual property clauses in UK software licensing agreements from the last 5 years,” or “Which of our active contracts contain a force majeure clause that covers pandemics?”

- Financial & Regulatory Compliance: A RAG for a financial institution, trained on SEC filings, anti-money laundering (AML) regulations, and internal audit policies. An analyst can ask, “What are the reporting requirements for a transaction over $10,000 involving a politically exposed person?” and receive an immediate, accurate answer with citations.

Investment Thesis 3: Revenue Generation & Product Innovation

- Problem: Customers and internal teams struggle to find the right information to make purchasing decisions or build new products.

- RAG Solution: An externally-facing or product-integrated RAG that acts as an intelligent guide.

- Value Proposition: Improves customer experience, increases conversion rates, and creates new, AI-powered product features that serve as a competitive moat.

- Key Risks: Latency, scalability, and ensuring a seamless user experience.

Use Cases:

- AI-Powered Product Features: A B2B SaaS company can integrate a RAG into their application. Instead of a simple search bar, users can ask complex questions about how to use the product, and the RAG provides answers directly from the help documentation, tutorials, and API guides. This becomes a core, value-adding feature of the product itself.

- Sales & Marketing Enablement: A RAG for the sales team, trained on all product marketing materials, case studies, and competitor analyses. A sales representative preparing for a call can ask, “Give me three case studies of manufacturing clients in Germany who saved money using our supply chain module, and summarize the key talking points for each.”

Chapter 4: A Phased Roadmap for RAG Implementation

A successful RAG implementation is not a monolithic IT project; it’s an iterative journey that delivers value at each stage.

Phase 1: Proof of Concept (The Lab) - (Weeks 1-4)

- Goal: Prove technical feasibility and demonstrate tangible value to an executive sponsor on a small, controlled scale.

- Actions:

- Select a High-Value, Bounded Knowledge Base: Do not try to boil the ocean. Choose a single, well-maintained set of documents, such as the technical documentation for one specific product.

- Define a Narrow Use Case: Focus on answering a small set of important questions (e.g., “How do I configure the API for X?”).

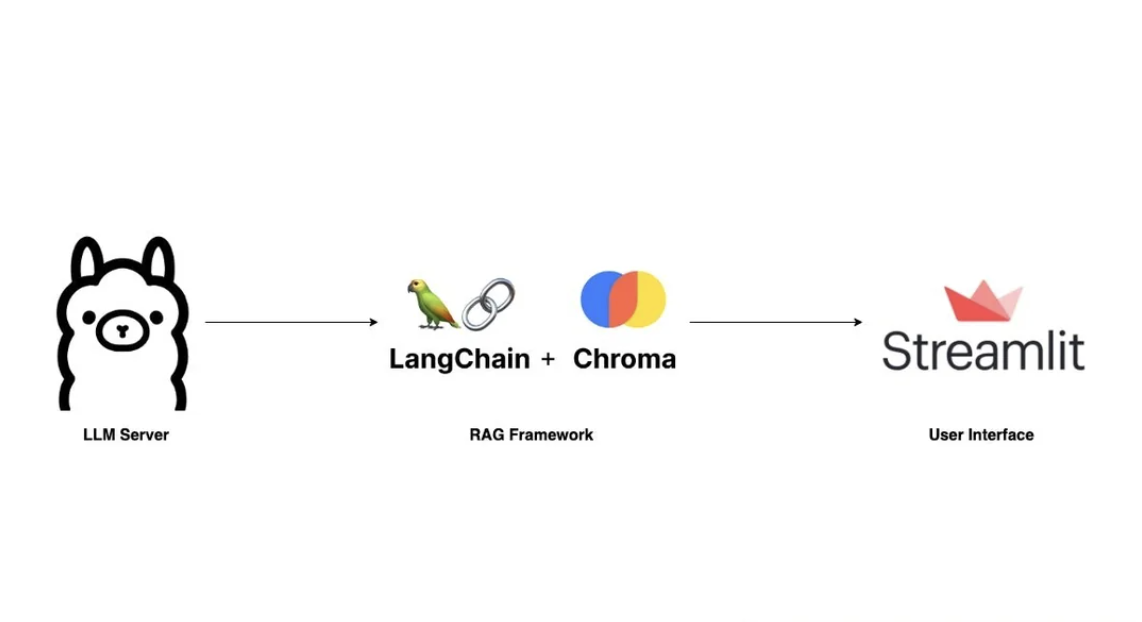

- Use Lightweight Tools: Build a quick demo using open-source libraries like LangChain or LlamaIndex, a local vector database like ChromaDB, and an open-source LLM via Ollama or a simple API call to a provider like OpenAI.

- Outcome: A working demo that can answer a handful of questions correctly, proving to leadership that the technology is real and has potential.

Phase 2: Pilot Program (The First Deployment) - (Quarter 1)

- Goal: Solve a real business problem for a small, well-defined group of “friendly” internal users.

- Actions:

- Productionize the PoC: Move from local scripts to a more robust, cloud-based architecture. Select an enterprise-grade vector database (e.g., Pinecone, Weaviate) and a reliable LLM provider.

- Select a Pilot Team: Choose a small team that is feeling a significant pain point, such as a 5-10 person customer support team.

- Build a Simple UI: Create a basic but usable web interface for the team to ask questions.

- Gather Intensive Feedback: Work closely with the pilot team to understand where the system succeeds and fails. Focus on improving the relevance of retrieved documents and the accuracy of the final answers.

- Outcome: A functional internal tool that is actively solving a real problem and providing measurable value (e.g., reduced ticket response time). You will also have a rich set of user feedback to guide future development.

Phase 3: Enterprise Scale-Up (The Factory) - (Quarters 2-4)

- Goal: Expand the RAG system into a reliable, secure, enterprise-wide platform.

- Actions:

- Establish Governance: Create a formal process for identifying, validating, and adding new knowledge sources to the system.

- Invest in Observability: Implement tools to monitor the RAG pipeline end-to-end. Track query latency, retrieval relevance, and user feedback on answer quality. Platforms like LangSmith or Arize AI are built for this.

- Build a Platform: Abstract the RAG pipeline into a centralized service that different teams and applications can use.

- Execute the Roadmap: Begin onboarding new departments and use cases from your investment portfolio, one by one.

- Outcome: A scalable, enterprise-wide knowledge platform that becomes a core part of the company’s infrastructure and a source of durable competitive advantage.

Chapter 5: Conclusion - RAG as a Strategic Imperative

Generative AI represents a fundamental shift in how we interact with information. However, generic, public-facing LLMs are a commodity. An organization’s true, defensible advantage lies in its unique, proprietary knowledge accumulated over years of operation.

Retrieval-Augmented Generation is the critical bridge between the commodity of AI and the unique value of your enterprise data. It is the practical, secure, and powerful mechanism for transforming your company’s inert archives of knowledge into a dynamic, interactive expert system. By grounding the power of LLMs in the factual reality of your own documents, RAG mitigates the risks of hallucination and creates a tool that is not only intelligent but also trustworthy.

The question for leaders is not whether to experiment with Generative AI, but how to invest in it for strategic return. By viewing RAG through the lens of an investment portfolio and executing a phased, value-driven roadmap, organizations can unlock one of their most significant and underutilized assets—their collective intelligence.